# 40 | Pruning Memes - "Algo Stables Are Dead"

Watching a Forest Going Up in Flames and Blaming Oxygen Won't Help Us

Four-engine aircraft might soon be a thing of the past. The era that started with the very first commercial jet airliner, the de Havilland Comet, seems destined for the history books with the termination of the A380’s and the glorious 747’s production lines. Safety was the most obvious concern behind the choice of a four-engine plane. With four engines rather than two, common sense might suggest, the chance of being left without power would be drastically reduced. Safety, on planes, should always come first. The Extended-range Twin-engine Operational Performance Standards (ETOPS) introduced during the ‘80s changed the scene: enough evidence was collected to justify the use of twinjets for transoceanic flights too. Fascinatingly, the push towards two-engine planes was actually driven by safety concerns as well as cost. Even a single engine failure can be disruptive for a plane, and unsurprisingly the probability that at least one engine fails is higher with four turbines than with two. Two engines also mean less fuel, less weight, and less complexity in general. When you are dealing with operational risk, less is more.

The answer to the engine optimisation problem depends on a set of input variables: distance, weight, cost of fuel, and others. Two engines could be the answer within a certain input space, but I am sure there are other spaces where the answer is four, eight, or thirty. There is no number of engines, however, that can completely eliminate the risk of catastrophic failure, and we need to rely on available evidence and statistical inference, even when we gamble with our own lives. Irreducible uncertainty is everywhere, all the time. What we can do, however, is avoid blaming a one-engine plane if we stuff it with the number of people that should travel on an A380. The blame game is a tricky one to play.

## Hey, Don’t Blame the Algorithm, Degen

If you had asked me a couple of months ago who would be the you-know-who to be privately gossiped about during my first Palm Beach escapade, I would have undoubtedly answered Donald Trump. And I would have been mistaken. During the recent Blockworks-organised Permissionless event, that empty place under the spotlight was reserved for Kwon Do-Hyung, more commonly known as Do Kwon, founder and defender of the Terra ecosystem. For harnessing the power of the algorithm, his name would be wiped from history, and his tools of dominion and destruction hidden forever under the sand by the same journalists who had branded him a visionary until a few minutes earlier.

In the absence of Do, it was his alleged creation (or adoption), the algorithmic stablecoin, that remained to take the blame. In hindsight it seemed obvious to literally everyone (as it always does) that the design of Terra’s USD-pegged stable was always destined for a catastrophic debacle. The other steps came naturally: first Terra was rebranded from algorithmic stablecoin to just a Ponzi scheme, and subsequently the same definition was extended to every algorithmic monetary design.

But what is an algorithmic stablecoin? In its purest definition, an algorithmic stablecoin is a blockchain-enabled (and transferable) unit whose value, when expressed in relation to another specific unit, is kept stable through the use of some sort of automated mechanism. It would be fair to say that, given the (locally) chaotic nature of human behaviour, every pegged asset needs to resort to some sort of stabilisation mechanism, algorithmic or not. The term algorithmic, then, simply identifies those designs that have opted for programmatic, rather than manual, stabilisation.

With time, however, the term has taken on other connotations. In the current crypto discourse the term algorithmic has come to represent a construct where value is maintained by some sort of flow management mechanism that compensates for all buying and selling pressures. This revamped definition is not only improper when applied to algorithmic stablecoins in general, but also unhelpful, as it fails to point out the distinctive characteristics of such design choices.

## There Is No Superior Stablecoin Design, But For Sure There Are Bad Ones

If we swallow the laziness pill and discard algorithmic stablecoins as inferior design choices, we should also be ready to rank stablecoin designs along a set of metrics. Can we do it?

First, a super-fast recap of three benchmark stable designs: $DAI, $FRAX, $UST. To the designers and core teams, please forgive me. These recaps are tailored for the occasion, so better not to skip them.

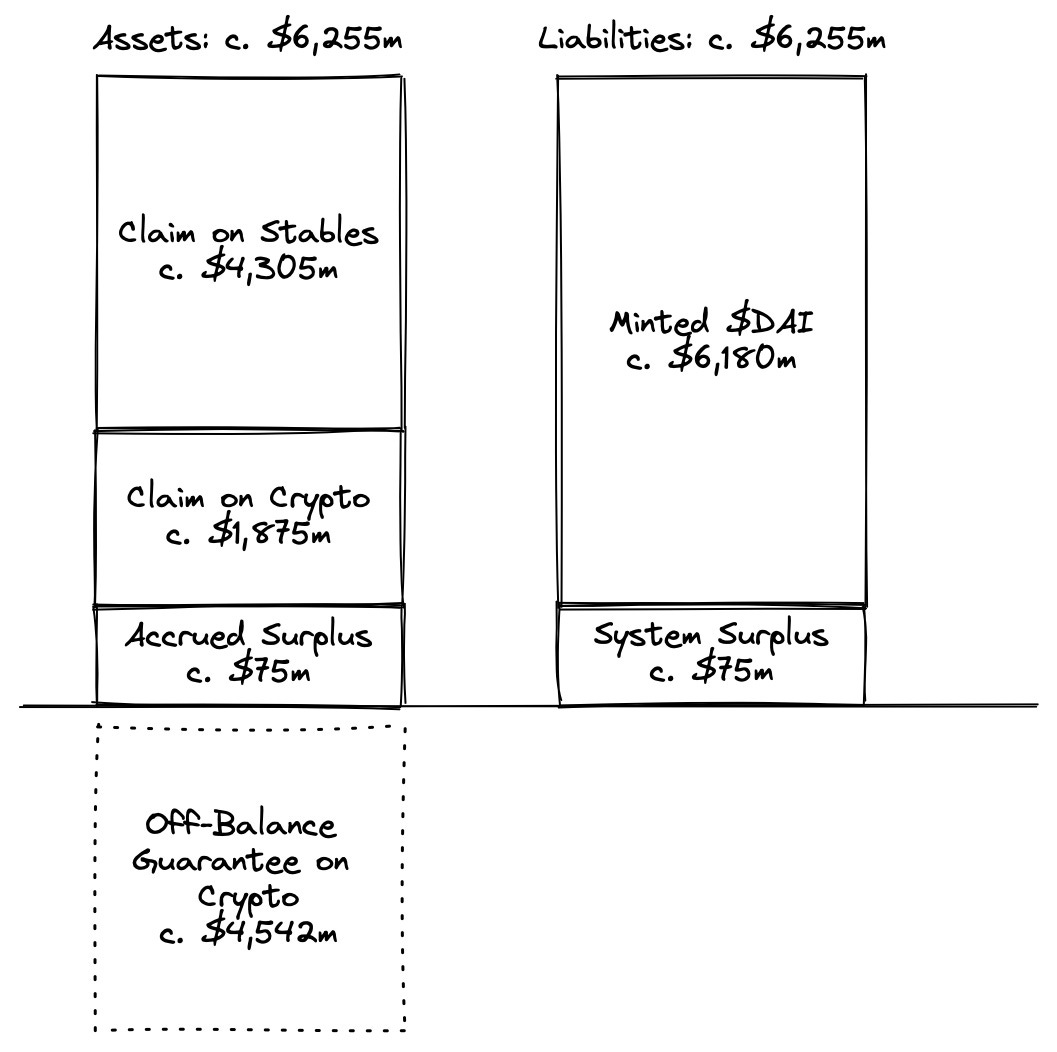

$DAI → MakerDAO mints $DAI mainly when holders of whitelisted collateral pledge such collateral to permissionless vaults, including an element of over-collateralisation. The minting mechanism alone, however, doesn’t protect against fluctuations in value (when measured in USD terms) across DEXs and CEXs. In order to peg $DAI to USD, MakerDAO opted for a combination of (a) interest rate policy (through the Dai Savings Rate, currently at 0.01%) and (b) permissionless open market operations, called the Price Stability Mechanism (PSM). Channel (b) is relevant to our discussion: MakerDAO (subject to a maximum ceiling) commits to swap a unit of $USD(C) for $DAI at a 1:1 ratio, thus allowing arbitrageurs to profit whenever $DAI trades above the peg. This mechanism is algorithmic in nature, and is intended to absorb excess system demand for $DAI by diluting the circulating monetary supply; even following the Terra crisis there is c. $3.5b sitting in Maker’s PSM vaults. The system has proved successful in limiting $DAI’s upside vs. the peg, while the downside is managed mainly by over-collateralisation.
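The PSM arbitrage described above can be sketched in a few lines. This is a simplified illustration, not Maker's actual contract logic: it assumes $USDC is worth exactly $1, ignores gas and slippage, and the function name, the `ceiling_remaining` parameter, and the `fee` parameter are all hypothetical.

```python
def psm_arbitrage_profit(
    dai_market_price: float,
    usdc_in: float,
    ceiling_remaining: float = float("inf"),
    fee: float = 0.0,
) -> float:
    """USD profit from swapping USDC into DAI 1:1 and selling the DAI at market.

    Assumptions (not from the protocol's code): USDC = $1, no gas, no slippage,
    and a hypothetical proportional fee charged on the swap.
    """
    # The swap is only available up to the remaining room under the ceiling.
    swapped = min(usdc_in, ceiling_remaining)
    # 1:1 swap, net of the hypothetical fee.
    dai_out = swapped * (1.0 - fee)
    # Sell the freshly minted DAI at the prevailing market price.
    return dai_out * dai_market_price - swapped


if __name__ == "__main__":
    # DAI trading at a 1% premium: the trade is profitable, and the newly
    # minted DAI adds supply that pushes the price back towards the peg.
    print(psm_arbitrage_profit(dai_market_price=1.01, usdc_in=1_000.0))
    # DAI trading below the peg: this leg of the trade loses money,
    # which is why the PSM swap-in mainly caps the upside.
    print(psm_arbitrage_profit(dai_market_price=0.99, usdc_in=1_000.0))
```

The sign of the result is the point: the incentive only exists when $DAI trades above $1, and it disappears once the ceiling is hit.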

In accounting terms, Maker’s over-collateralisation represents an off-balance guarantee that exceeds what has been minted by the protocol. Maker has indirect control over this value but doesn’t own it, à la Fei. The accounting effect of such a guarantee is to sustain the fair valuation of Maker’s claim on crypto collateral and, indirectly, the fair valuation of its liabilities, i.e. the value of $DAI. If, for example, the off-balance guarantee fell below a certain (market-driven) level and were not replenished, the accounting effect would ultimately be a reduction in the fair valuation of $DAI vs. the on-balance assets, an effect that could be only partially counterbalanced by aggressive interest rate policy.

$FRAX → $FRAX is another Ethereum-based pegged stablecoin. The creation or destruction of monetary base is left entirely to arbitrageurs, who can mint new $FRAX at a committed 1:1 ratio when its market value is above the peg (thus clipping a profit), or buy it in the open market and burn it, receiving value at a committed 1:1 ratio, when its market value is below the peg. $FRAX monetary creation is permissionless, as it is for $DAI, but rather than being over-collateralised by a pool of exogenous assets it is actually under-collateralised. The value backing each $FRAX unit, in other words, is composed c. 90% (as of today) of external assets, with the remainder in $FXS, the system’s equity token.
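The mint/redeem arbitrage above can be sketched as follows, under stated assumptions. The 90% collateral ratio comes from the text; everything else (function names, zero fees, no slippage, $FXS valued at market with no price impact) is a hypothetical simplification rather than Frax's actual implementation.

```python
from dataclasses import dataclass


@dataclass
class MintQuote:
    collateral_usd: float  # exogenous assets supplied
    fxs_usd: float         # value of $FXS supplied


def mint_cost(frax_amount: float, collateral_ratio: float = 0.90) -> MintQuote:
    """Split the $1-per-unit mint cost between external collateral and $FXS."""
    return MintQuote(
        collateral_usd=frax_amount * collateral_ratio,
        fxs_usd=frax_amount * (1.0 - collateral_ratio),
    )


def arbitrage_action(market_price: float, tolerance: float = 0.0) -> str:
    """Which leg of the trade pays off at a given market price."""
    if market_price > 1.0 + tolerance:
        return "mint_and_sell"   # mint at $1 of value, sell above $1
    if market_price < 1.0 - tolerance:
        return "buy_and_redeem"  # buy below $1, redeem for $1 of value
    return "hold"


def arbitrage_profit(market_price: float, frax_amount: float) -> float:
    """Gross profit of the profitable leg, ignoring fees and slippage."""
    return abs(market_price - 1.0) * frax_amount


if __name__ == "__main__":
    print(mint_cost(100.0))
    for price in (1.02, 1.00, 0.98):
        print(price, arbitrage_action(price), arbitrage_profit(price, 100.0))
```

Note that the redeem leg pays out partly in $FXS, so the "$1 of value" received below the peg is only as good as the market for the equity token at that moment.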

$UST → A lot has been written on Terra’s experiment (including here on DR) and I won’t repeat myself. Terra’s mechanics resemble those of Frax, but upside down: rather than having a 90/10 ratio of exogenous/endogenous collateral, Terra flipped that ratio to 10/90. There are several other design differences we won’t comment on here.

Although in trying to identify how good one model is vs. another we should take into consideration their respective use cases, we can assume here for simplicity that all stablecoins want to do exactly the same things for their holders. With this assumption, answering how good a stablecoin design is becomes fundamentally a question about its:

1. Velocity: how easy it is for a stable to scale up
2. Solvency: how reliable is the value backing the currency, i.e. its peg
3. Liquidity: how robust is the peg against short-term shocks

(1) Velocity → Currency products enjoy economies of scale, since big ones benefit from large positive externalities (look at the US dollar). Designing a currency that can scale fast and resist market shocks is the Holy Grail of monetary mechanism design. The thing about the Holy Grail is that nobody has ever seen it, no one can truly say what it looks like, and most probably it doesn’t even exist. In order to promote ultra-fast scaling Terra opted for an almost fully reflexive (more on reflexive later) system where the protocol could print new money without the need to inject exogenous collateral into the system, at the expense of (2) and (3), with the hope of rebalancing the system at a later stage. In doing so, the system became a victim of its own success, as we argued here and here and here. MakerDAO went in the opposite direction, leaving money printing and burning to market dynamics, or to organic demand for leverage. Outstanding $DAI grew alongside crypto market liquidity, although far less than $UST, and shrank by 30-40% when liquidity dried up, which is still better than Terra’s negative 100%. Frax sits somewhere in the middle, capitalising on its peg volatility through Algorithmic Market Operations in order to attract assets. At $1.4b outstanding value, scaling up $FRAX has been painfully slow, and it wasn’t surprising that the protocol decided to ally itself with Terra and its 4pool plan. The solution to the velocity conundrum might be, as usual, more boring than not: attract sticky demand for leverage with longer duration. MakerDAO has been trying to do it for months by linking up with real-world borrowers, so far mainly in real estate. Success would mean a larger stablecoin pool for $DAI, and a longer blended duration of the balance sheet. If done properly it would transform the protocol; if done poorly it would destroy it.

(2) Solvency → You can try to optimise it as much as you want, but confidence in the viability of a currency will always be linked to the quality of the assets backing it, and to its governance mechanisms. Exogenous collateral makes for more reliable stablecoins, as we will discuss later on. But the nature of collateral is only part of the picture; quantum is the other part. Maker’s over-collateralisation model offered solid assurances to $DAI holders and kept the protocol alive through its bumpiest days. There has been a shared opinion in the market that an over-collateralised design makes it impossible to scale, as it requires way too much collateral to mint currency. In my view this is just another meme that deserves a dedicated entry on DR. DefiLlama puts the total value locked in DeFi at $108.5b; for reference, Chase’s total assets alone were c. $3.4t (30x DeFi’s) in 2020. With so much collateral to onboard to crypto it is not the numerator that makes the difference. In addition, by onboarding a more diversified collateral pool the blended over-collateralisation ratio could come down significantly by benefiting from lower collateralisation levels; this was one of the very first chapters of DR. The over-collateralisation problem is at least 20 years away.
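To make the arithmetic behind the solvency argument concrete, here is a small sketch. The $108.5b and $3.4t figures are the ones quoted above; the collateralisation ratios (150% for crypto vaults, 110% for more stable collateral) and the debt amounts are purely hypothetical inputs chosen for illustration.

```python
def blended_collateral_ratio(vaults: list[tuple[float, float]]) -> float:
    """Debt-weighted average collateralisation ratio.

    Each vault is (debt_usd, collateral_ratio), e.g. (100.0, 1.5) means
    $100 of stablecoin minted against $150 of collateral.
    """
    total_debt = sum(debt for debt, _ in vaults)
    if total_debt <= 0:
        raise ValueError("total debt must be positive")
    return sum(debt * ratio for debt, ratio in vaults) / total_debt


# Figures quoted in the text.
DEFI_TVL_USD = 108.5e9
CHASE_TOTAL_ASSETS_USD = 3.4e12

if __name__ == "__main__":
    # Roughly 31x, consistent with the "30x" in the text.
    print(CHASE_TOTAL_ASSETS_USD / DEFI_TVL_USD)

    # Hypothetical ratios: volatile crypto collateral at 150%,
    # lower-volatility collateral at 110%.
    crypto_only = [(100.0, 1.5)]
    diversified = [(100.0, 1.5), (100.0, 1.1)]
    print(blended_collateral_ratio(crypto_only))
    print(blended_collateral_ratio(diversified))
```

Under these made-up inputs, adding an equal amount of debt backed at 110% brings the blended ratio from 150% down to 130%, which is the direction of the effect the paragraph describes.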

(3) Liquidity → Even solvent, ultra-safe stablecoin designs that sacrifice speed of deployment in exchange for extreme protection are continuously exposed to market forces. The relative value of one asset vs. another is a function of short-term supply and demand dynamics, and in a pegged system there is little tolerance for volatility. Although solvency is, in my opinion, of paramount importance for currency design, we are assuming (and it is a big assumption) that each stablecoin is used as much as a store of value as it is for payments, so its relative valuation at each point in time matters. The effectiveness of a stable’s adjustment mechanisms has nothing to do with its long-term sustainability. $DAI, $UST, $FRAX, and $USDC have (had) different mechanisms to incentivise arbitrageurs to maintain the peg: $USDC by guaranteeing 1:1 fiat redeemability, $DAI through its PSM and its billions of dollars of observable liquidity, $FRAX and $UST through Curve AMMs.

Comparing $DAI and $UST along the liquidity dimension is a good lesson in hubris and long-term thinking. While $DAI effectively preserved the value derived from the massive liquidity inflows of the ‘21 bull market and the first phase of the ‘22 bear market in the form of excess $USDC available in the PSM, $UST de facto distributed such value to $LUNA holders during its expansionary phase. With excess secondary $UST demand pushing its value above the peg, that value was immediately used to burn $LUNA, pushing up its price. When the cycle turned, all that excess value had not been stored in hard form (as happened for $DAI) but distributed to those who sold $LUNA on the way up. Nansen published a great forensic study on Terra’s liquidity crisis. The TL;DR is that a small group of actors drained the liquidity pools Terra based its arbitraging activity on, ultimately with destructive and irreversible effects on the peg. Why irreversible? Because beyond the short-term shocks driven by market dynamics it is the long-term solvency (and governance) position of a stablecoin that matters.

> Seven “initiating” wallets swapped significant amounts of UST vs other stablecoins on Curve as early as the night of May 7 (UTC). These seven wallets had withdrawn sizable amounts of UST from the Anchor protocol on May 7 and before (as early as April) and bridged UST to the Ethereum blockchain via Wormhole. Out of these seven wallets, six interacted with centralized exchanges to send more UST (supposedly for selling) or, for a subset of these, to send USDC that had been swapped from Curve’s liquidity pools.
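The contrast between $DAI and $UST drawn above, storing expansion-phase inflows in hard form versus distributing them to equity holders, can be illustrated with a toy model. This is a deliberately crude sketch: the class, its fields, and the dollar amounts are hypothetical, and it ignores everything except where the excess demand ends up.

```python
from dataclasses import dataclass


@dataclass
class ToyStable:
    name: str
    retains_inflows: bool        # True: keep hard reserves; False: pass value to equity holders
    hard_reserves: float = 0.0   # e.g. USDC sitting in a PSM-like module
    distributed: float = 0.0     # value that accrued to equity-token holders

    def absorb_excess_demand(self, usd: float) -> None:
        """Route expansion-phase demand above the peg."""
        if self.retains_inflows:
            self.hard_reserves += usd
        else:
            self.distributed += usd

    def hard_coverage(self, redemptions_usd: float) -> float:
        """Share of a redemption wave that can be met with hard reserves."""
        if redemptions_usd <= 0:
            return 1.0
        return min(1.0, self.hard_reserves / redemptions_usd)


if __name__ == "__main__":
    dai_like = ToyStable("retaining", retains_inflows=True)
    ust_like = ToyStable("distributing", retains_inflows=False)

    # Same hypothetical inflow during the expansion.
    for stable in (dai_like, ust_like):
        stable.absorb_excess_demand(100.0)

    # Same hypothetical redemption wave when the cycle turns.
    for stable in (dai_like, ust_like):
        print(stable.name, stable.hard_coverage(50.0))
```

In the toy model both designs receive the same inflow, but only the retaining one has anything left to meet redemptions with; the distributing one has to fall back on the value of its own equity token.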

My personal conclusions are the following:

- There’s no superior design, only trade-offs that should be weighed against the desired predominant use case of the stable
- First-mover advantage is real in reaching mass adoption (look at $USDT)
- There are, however, bad designs that sacrifice Solvency (the core pillar) in exchange for Velocity
- Every design includes some algorithmic aspect

## The Real Deal: Reflexive vs. Anchored Stablecoin

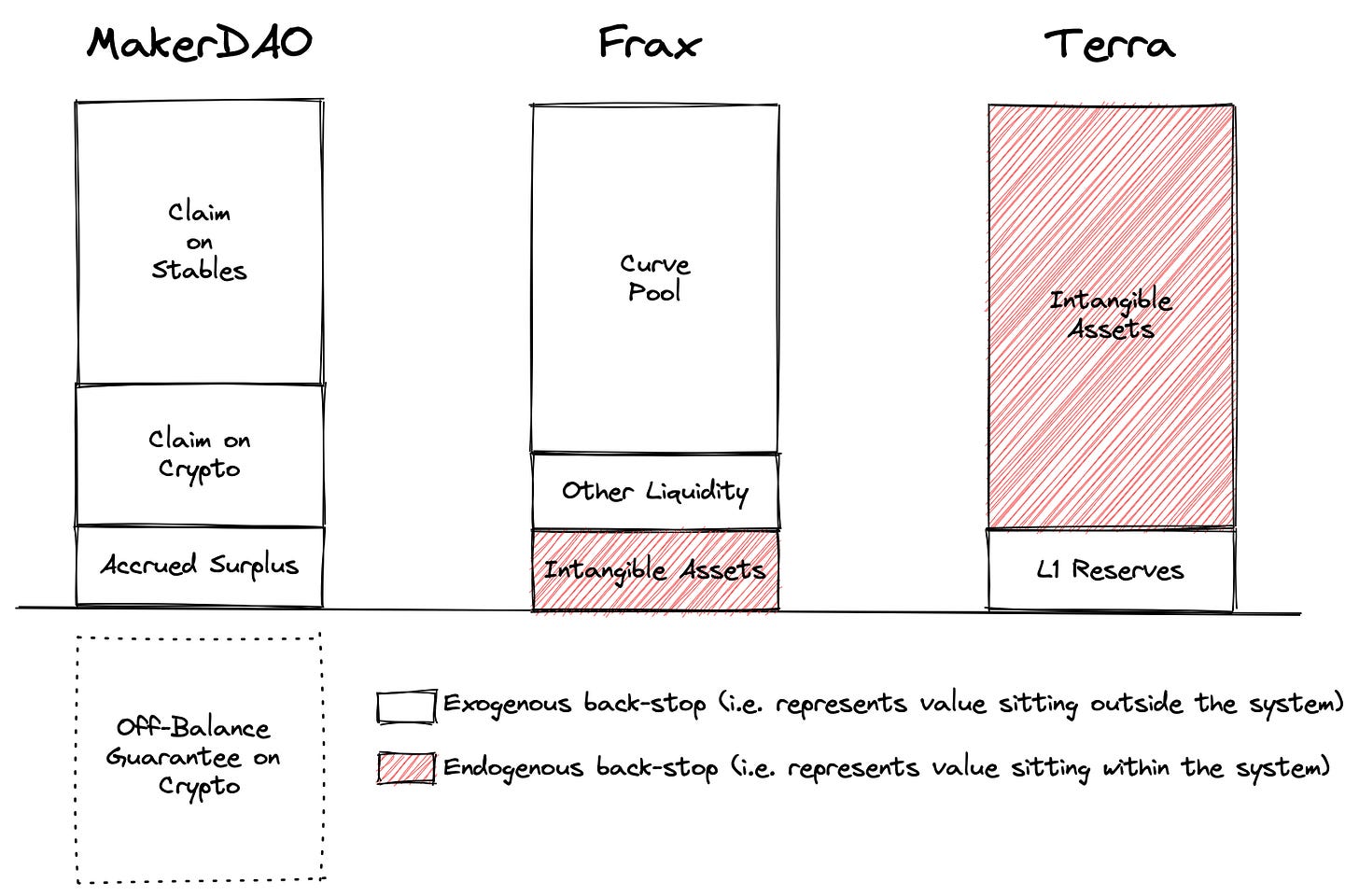

Back to solvency. In the chart below I compared the nature of each stablecoin’s backing, differentiating between what depends on exogenous and on endogenous back-stop value. An asset-secured mortgage, or $ETH-backed money, would be a good example of exogenously secured money. Value backed by expected future protocol profitability, on the other hand, would be an example of endogenously secured money. For a protocol whose future value depends on the viability of its main product (currency), it is easy to see how reflexive this type of backing is.

The exogeneity test → Defining what is exogenous and what is endogenous to a system is a tricky thing, as the boundaries of a system can get wider or narrower depending on the definition we adopt. $ETH, for example, is considered exogenous to the MakerDAO system in the chart above, but Maker is a project based on the Ethereum chain with currency printed through its technology; is $ETH truly exogenous then? I have a personal exogeneity test: is the project itself essential to the survival of the guarantee? If so, it is not an exogenous asset.

When a stablecoin (or currency in general) is backed to a significant extent by endogenous sources of value, I refer to it as a reflexive stablecoin. I call the other type an anchored stablecoin. Reflexive coins scale up fast when liquidity flows in, and tend to implode even faster. This categorisation is also subject to the analyst’s discretion but here’s my take.

Each of the coins in the chart above has an algorithmic component, but that’s clearly not the differentiator. Simplifying and categorising is a very complex thing to do in a world as fluid and over-layered as DeFi, but if we really want to do it for the sake of explanation, we should at least try to do it the right way.
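As a rough illustration of the reflexive vs. anchored split, the categorisation can be written as a one-line rule on the share of endogenous backing. The 50% threshold is an assumption of this sketch (the text only says "a significant portion" and leaves it to the analyst's discretion), the 10% and 90% shares are the ones quoted earlier for $FRAX and $UST, and treating $DAI as 0% endogenous is a simplification.

```python
def classify_stablecoin(endogenous_share: float, threshold: float = 0.5) -> str:
    """Label a design by how much of its backing is endogenous.

    `threshold` is a hypothetical cut-off, not a figure from the text.
    """
    if not 0.0 <= endogenous_share <= 1.0:
        raise ValueError("endogenous_share must be between 0 and 1")
    return "reflexive" if endogenous_share >= threshold else "anchored"


# Endogenous share of backing; the $DAI value is a simplifying assumption.
DESIGNS = {
    "DAI": 0.0,
    "FRAX": 0.10,
    "UST": 0.90,
}

if __name__ == "__main__":
    for name, share in DESIGNS.items():
        print(name, classify_stablecoin(share))
```

Moving the threshold changes the labels at the margin, which is exactly the analyst's discretion mentioned above; what the rule never looks at is whether the stabilisation is algorithmic.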